Nipype on Neurodesk

An interactive RISE slideshow

Author: Monika Doerig

Press Space to proceed through the slideshow.

Set up Neurodesk

In code cells, press Shift-Enter (as usual) to evaluate your code and move directly to the next cell, if it is already displayed.

Press Ctrl-Enter to run a command without moving directly to the next cell.

%%capture

import os

import sys

IN_COLAB = 'google.colab' in sys.modules

if IN_COLAB:
    os.environ["LD_PRELOAD"] = ""
    os.environ["APPTAINER_BINDPATH"] = "/content,/tmp,/cvmfs"
    os.environ["MPLCONFIGDIR"] = "/content/matplotlib-mpldir"
    os.environ["LMOD_CMD"] = "/usr/share/lmod/lmod/libexec/lmod"

    !curl -J -O https://raw.githubusercontent.com/NeuroDesk/neurocommand/main/googlecolab_setup.sh
    !chmod +x googlecolab_setup.sh
    !./googlecolab_setup.sh

    os.environ["MODULEPATH"] = ':'.join(map(str, list(map(lambda x: os.path.join(os.path.abspath('/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/'), x),os.listdir('/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/')))))

# Output CPU information:

!cat /proc/cpuinfo | grep 'vendor' | uniq

!cat /proc/cpuinfo | grep 'model name' | uniq

vendor_id : AuthenticAMD

model name : AMD EPYC-Rome Processor
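The MODULEPATH assignment in the setup cell is dense. The sketch below is a more readable equivalent: it joins every entry found directly under the module root into one colon-separated string. The helper name `build_modulepath` is hypothetical, and the `sorted()` call is an addition for reproducible ordering (the original one-liner keeps whatever order `os.listdir()` returns).

```python
import os


def build_modulepath(modules_root):
    """Return a colon-separated string of every entry directly under modules_root."""
    modules_root = os.path.abspath(modules_root)
    # sorted() is an addition for reproducible ordering; the original one-liner
    # uses the order returned by os.listdir().
    categories = sorted(os.listdir(modules_root))
    return ":".join(os.path.join(modules_root, name) for name in categories)


# Possible usage with the path from the setup cell (only valid where /cvmfs is mounted):
# os.environ["MODULEPATH"] = build_modulepath("/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/")
```

Each subdirectory of the module root corresponds to one software category (for example functional_imaging or image_registration), which is why the resulting MODULEPATH contains one entry per category.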

Keep pressing Space to advance to the next slide.

Objectives

- Know the basics of Nipype

- Know how to use it on Neurodesk

- Learn how Python can be applied to analyze neuroimaging data through practical examples

- Get pointers to resources

Be aware ...

- Nipype is part of a large ecosystem

- Therefore, it is about knowing what is out there and empowering you with new tools

- Sometimes, the devil is in the details

- Things take time

Table of contents

1. Introduction to Nipype

2. Nipype in Jupyter Notebooks on Neurodesk

3. Exploration of Nipype’s building blocks

4. Pydra: A modern dataflow engine developed for the Nipype project

1. Introduction to Nipype

- Open-source Python project that originated within the neuroimaging community

- Provides a unified interface to diverse neuroimaging packages, including ANTS, SPM, FSL, FreeSurfer, and others

- Facilitates seamless interaction between these packages

- Its flexibility has made it a preferred basis for widely used pre-processing tools such as fMRIPrep

→ A primary goal of Nipype is to simplify the integration of different analysis packages, so that users can apply the algorithm best suited to each specific problem.

2. Nipype in Jupyter Notebooks on Neurodesk

The Neurodesk project enables the use of all neuroimaging applications inside computational notebooks.

Demonstration of the module system in Python and Nipype:

We will use the software tool lmod to manage and load different software packages and libraries. It simplifies access to the various software applications and allows users to easily switch between versions of a package, manage dependencies, and ensure compatibility with their computing environment.

# In code cells, press Shift-Enter to evaluate your code and move directly to the next cell, if it is already displayed.

# Or press Ctrl-Enter to run a command without moving directly to the next cell.

# Use lmod to load any software tool with a specific version

import lmod

await lmod.load('fsl/6.0.7.4')

await lmod.list()

['Lmod',

'Warning:',

'The',

'environment',

'MODULEPATH',

'has',

'been',

'changed',

'in',

'unexpected',

'ways.',

'Lmod',

'is',

'unable',

'to',

'use',

'given',

'MODULEPATH.',

'It',

'is',

'using:',

'"/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/functional_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/rodent_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_registration:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/structural_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_segmentation:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/quantitative_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/workflows:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/hippocampus:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_reconstruction:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/data_organisation:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/electrophysiology:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/phase_processing:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/programming:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/machine_learning:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/diffusion_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/body:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/visualization:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/spectroscopy:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/quality_control:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/statistics:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/shape_analysis:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/spine:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/molecular_biology:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/bids_apps:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/cryo_EM:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_diffusion_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_functional_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_workflows::".',

'Please',

'use',

'"module',

'use',

'to',

'change',

'MODULEPATH',

'instead.',

'fsl/6.0.7.4']
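Because the Lmod warning text ends up inside the list returned by lmod.list(), the loaded modules are hard to spot. As a minimal sketch, assuming that module entries always have the shape `name/version`, the hypothetical helper `loaded_modules` below filters such a list down to the actual modules.

```python
import re

# Matches entries shaped like "name/version", e.g. "fsl/6.0.7.4".
MODULE_PATTERN = re.compile(r"^[A-Za-z0-9_.+-]+/[A-Za-z0-9_.+-]+$")


def loaded_modules(entries):
    """Keep only the entries that look like a module name with a version."""
    return [entry for entry in entries if MODULE_PATTERN.match(entry)]


# Shortened stand-in for the list shown above (not the full output).
example = [
    "Lmod",
    "Warning:",
    "MODULEPATH.",
    '"/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/workflows::".',
    "instead.",
    "fsl/6.0.7.4",
]
print(loaded_modules(example))  # ['fsl/6.0.7.4']
```

In the notebook, the same helper could be applied to the real result, for example `loaded_modules(await lmod.list())`.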

import os

os.environ["FSLOUTPUTTYPE"]="NIFTI_GZ" # Default is NIFTI

from nipype.interfaces.fsl.base import Info

print(Info.version())

print(Info.output_type())

# If the FSL version is changed using lmod above, the kernel of the notebook needs to be restarted!

6.0.7.4

NIFTI_GZ
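The output type decides which file extension FSL tools write. The lookup below is a small sketch of that relationship, assuming the usual FSL conventions for the four output types that appear in the interface help; the helper `expected_filename` is hypothetical and not part of Nipype.

```python
# Assumed mapping between FSLOUTPUTTYPE values and file extensions.
FSL_EXTENSIONS = {
    "NIFTI": ".nii",
    "NIFTI_PAIR": ".img",
    "NIFTI_GZ": ".nii.gz",
    "NIFTI_PAIR_GZ": ".img.gz",
}


def expected_filename(basename, output_type="NIFTI_GZ"):
    """Return the file name an FSL tool would be expected to write for this output type."""
    return basename + FSL_EXTENSIONS[output_type]


print(expected_filename("T1w_brain"))           # T1w_brain.nii.gz
print(expected_filename("T1w_brain", "NIFTI"))  # T1w_brain.nii
```

With FSLOUTPUTTYPE set to NIFTI_GZ as above, outputs are therefore expected to be compressed .nii.gz files.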

# Load afni and spm as well

await lmod.load('afni/22.3.06')

await lmod.load('spm12/r7771')

await lmod.list()

Lmod Warning: The environment MODULEPATH has been changed in unexpected ways.

Lmod is unable to use given MODULEPATH. It is using:

"/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/functional_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/rodent_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_registration:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/structural_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_segmentation:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/quantitative_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/workflows:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/hippocampus:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_reconstruction:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/data_organisation:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/electrophysiology:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/phase_processing:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/programming:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/machine_learning:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/diffusion_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/body:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/visualization:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/spectroscopy:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/quality_control:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/statistics:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/shape_analysis:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/spine:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/molecular_biology:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/bids_apps:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/cryo_EM:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_diffusion_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_functional_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_workflows::".

Please use "module use ..." to change MODULEPATH instead.

Lmod Warning: The environment MODULEPATH has been changed in unexpected ways.

Lmod is unable to use given MODULEPATH. It is using:

"/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/functional_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/rodent_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_registration:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/structural_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_segmentation:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/quantitative_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/workflows:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/hippocampus:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_reconstruction:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/data_organisation:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/electrophysiology:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/phase_processing:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/programming:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/machine_learning:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/diffusion_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/body:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/visualization:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/spectroscopy:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/quality_control:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/statistics:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/shape_analysis:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/spine:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/molecular_biology:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/bids_apps:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/cryo_EM:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_diffusion_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_functional_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_workflows::".

Please use "module use ..." to change MODULEPATH instead.

['Lmod',

'Warning:',

'The',

'environment',

'MODULEPATH',

'has',

'been',

'changed',

'in',

'unexpected',

'ways.',

'Lmod',

'is',

'unable',

'to',

'use',

'given',

'MODULEPATH.',

'It',

'is',

'using:',

'"/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/functional_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/rodent_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_registration:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/structural_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_segmentation:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/quantitative_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/workflows:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/hippocampus:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/image_reconstruction:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/data_organisation:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/electrophysiology:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/phase_processing:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/programming:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/machine_learning:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/diffusion_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/body:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/visualization:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/spectroscopy:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/quality_control:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/statistics:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/shape_analysis:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/spine:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/molecular_biology:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/bids_apps:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/cryo_EM:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_diffusion_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_functional_imaging:/cvmfs/neurodesk.ardc.edu.au/neurodesk-modules/_workflows::".',

'Please',

'use',

'"module',

'use',

'to',

'change',

'MODULEPATH',

'instead.',

'fsl/6.0.7.4',

'afni/22.3.06',

'spm12/r7771']

3. Exploration of Nipype’s building blocks

- Interfaces: wrap a program or function

- Workflow engine:

  - Nodes: wrap an interface for use in a workflow

  - Workflows: a directed graph (or forest of graphs) whose edges represent data flow

- Data input: many different modules to grab or select data, depending on the data structure

- Data output: different modules to handle the data stream output

- Plugins: components that describe how a workflow should be executed
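To make the idea of a workflow as a directed graph concrete, here is a minimal sketch that uses only Python's standard library (`graphlib`, Python 3.9 or later), not Nipype itself. The node names are hypothetical; each entry maps a node to the nodes whose outputs it consumes, and a topological sort yields one valid execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key is a node, each value is the set of nodes
# whose outputs it needs (the incoming edges of the data-flow graph).
dependencies = {
    "skullstrip": {"grab_data"},
    "smooth": {"skullstrip"},
    "mask": {"skullstrip"},
    "save_results": {"smooth", "mask"},
}

# A topological sort returns an order in which every node runs after its inputs exist.
execution_order = list(TopologicalSorter(dependencies).static_order())
print(execution_order)
```

Nodes without a mutual dependency (here "smooth" and "mask") may appear in either order, and they are also the ones an execution plugin could run in parallel.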

Preparation: Download of open-source data, installations, and imports

# Download 2 subjects of the Flanker Dataset

PATTERN = "sub-0[1-2]"

!datalad install https://github.com/OpenNeuroDatasets/ds000102.git

!cd ds000102 && datalad get $PATTERN

[INFO ] scanning for unlocked files (this may take some time)

[INFO ] Remote origin not usable by git-annex; setting annex-ignore

[INFO ] access to 1 dataset sibling s3-PRIVATE not auto-enabled, enable with:

| datalad siblings -d "/storage/tmp/tmpm_b9rmze/ds000102" enable -s s3-PRIVATE

install(ok): /storage/tmp/tmpm_b9rmze/ds000102 (dataset)

get(ok): sub-01/anat/sub-01_T1w.nii.gz (file) [from s3-PUBLIC...]

get(ok): sub-01/func/sub-01_task-flanker_run-1_bold.nii.gz (file) [from s3-PUBLIC...]

get(ok): sub-01/func/sub-01_task-flanker_run-2_bold.nii.gz (file) [from s3-PUBLIC...]

get(ok): sub-02/anat/sub-02_T1w.nii.gz (file) [from s3-PUBLIC...]

get(ok): sub-02/func/sub-02_task-flanker_run-1_bold.nii.gz (file) [from s3-PUBLIC...]

get(ok): sub-02/func/sub-02_task-flanker_run-2_bold.nii.gz (file) [from s3-PUBLIC...]

get(ok): sub-01 (directory)

get(ok): sub-02 (directory)

action summary:

get (ok: 8)
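The PATTERN used in the download cell is a shell-style glob, so `[1-2]` matches exactly one character in the range 1 to 2. The sketch below illustrates this with Python's `fnmatch` module, which follows the same wildcard syntax; the candidate names are made up for illustration.

```python
from fnmatch import fnmatchcase

PATTERN = "sub-0[1-2]"

# Hypothetical directory names, only to illustrate the matching rule.
candidates = ["sub-01", "sub-02", "sub-03", "sub-10", "sub-26"]

selected = [name for name in candidates if fnmatchcase(name, PATTERN)]
print(selected)  # ['sub-01', 'sub-02']
```

Changing the pattern to, for example, `sub-0[1-5]` would select the first five subjects in the same way.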

! pip install nilearn

Defaulting to user installation because normal site-packages is not writeable

Requirement already satisfied: nilearn in /home/ubuntu/.local/lib/python3.10/site-packages (0.10.2)

Requirement already satisfied: joblib>=1.0.0 in /home/ubuntu/.local/lib/python3.10/site-packages (from nilearn) (1.3.2)

Requirement already satisfied: lxml in /home/ubuntu/.local/lib/python3.10/site-packages (from nilearn) (4.9.4)

Requirement already satisfied: nibabel>=3.2.0 in /home/ubuntu/.local/lib/python3.10/site-packages (from nilearn) (5.2.0)

Requirement already satisfied: numpy>=1.19.0 in /home/ubuntu/.local/lib/python3.10/site-packages (from nilearn) (1.26.2)

Requirement already satisfied: packaging in /usr/lib/python3/dist-packages (from nilearn) (21.3)

Requirement already satisfied: pandas>=1.1.5 in /home/ubuntu/.local/lib/python3.10/site-packages (from nilearn) (2.1.4)

Requirement already satisfied: requests>=2.25.0 in /home/ubuntu/.local/lib/python3.10/site-packages (from nilearn) (2.32.3)

Requirement already satisfied: scikit-learn>=1.0.0 in /home/ubuntu/.local/lib/python3.10/site-packages (from nilearn) (1.3.2)

Requirement already satisfied: scipy>=1.6.0 in /home/ubuntu/.local/lib/python3.10/site-packages (from nilearn) (1.11.4)

Requirement already satisfied: python-dateutil>=2.8.2 in /home/ubuntu/.local/lib/python3.10/site-packages (from pandas>=1.1.5->nilearn) (2.8.2)

Requirement already satisfied: pytz>=2020.1 in /usr/lib/python3/dist-packages (from pandas>=1.1.5->nilearn) (2022.1)

Requirement already satisfied: tzdata>=2022.1 in /home/ubuntu/.local/lib/python3.10/site-packages (from pandas>=1.1.5->nilearn) (2023.3)

Requirement already satisfied: charset-normalizer<4,>=2 in /home/ubuntu/.local/lib/python3.10/site-packages (from requests>=2.25.0->nilearn) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in /usr/lib/python3/dist-packages (from requests>=2.25.0->nilearn) (3.3)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/lib/python3/dist-packages (from requests>=2.25.0->nilearn) (1.26.5)

Requirement already satisfied: certifi>=2017.4.17 in /usr/lib/python3/dist-packages (from requests>=2.25.0->nilearn) (2020.6.20)

Requirement already satisfied: threadpoolctl>=2.0.0 in /home/ubuntu/.local/lib/python3.10/site-packages (from scikit-learn>=1.0.0->nilearn) (3.2.0)

Requirement already satisfied: six>=1.5 in /usr/lib/python3/dist-packages (from python-dateutil>=2.8.2->pandas>=1.1.5->nilearn) (1.16.0)

WARNING: Error parsing dependencies of distro-info: Invalid version: '1.1build1'

WARNING: Error parsing dependencies of python-debian: Invalid version: '0.1.43ubuntu1'

from nipype import Node, Workflow, DataGrabber, DataSink

from nipype.interfaces.utility import IdentityInterface

from nipype.interfaces import fsl

from nilearn import plotting

from IPython.display import Image

import os

from os.path import join as opj

import matplotlib.pyplot as plt

import numpy as np

import nibabel as nib

# Create directory for all the outputs (if it doesn't exist yet)

! [ ! -d output ] && mkdir output
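The shell one-liner above can also be written in plain Python. This is a minimal equivalent sketch using `pathlib`, which does nothing if the directory already exists.

```python
from pathlib import Path

# Create the output directory; exist_ok=True avoids an error if it is already there.
output_dir = Path("output")
output_dir.mkdir(parents=True, exist_ok=True)
```

The Python form behaves the same on every operating system, which can be convenient when a notebook is also run outside Neurodesk.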

3.1. Interfaces: The core pieces of Nipype

Python wrapper around a particular piece of software (even if it is written in a programming language other than Python):

- FSL

- AFNI

- ANTS

- FreeSurfer

- SPM

- dcm2nii

- Nipy

- MNE

- DIPY

- ...

Such an interface knows what sort of options an external program has and how to execute it (e.g., keeps track of the inputs and outputs, and checks their expected types).
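To illustrate what "knowing the options and how to execute" means, here is a toy sketch of an interface-like class. It is not Nipype's implementation: `ToyBET` is a hypothetical stand-in that checks the type of one input and translates its fields into a `bet` command line, using the `-f` and `-m` flags listed in the BET help output further down.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToyBET:
    """Toy stand-in for an interface; NOT Nipype's actual implementation."""

    in_file: str
    out_file: str
    frac: Optional[float] = None  # fractional intensity threshold (-f)
    mask: bool = False            # create binary mask image (-m)

    def __post_init__(self):
        # Basic type check, mimicking how an interface validates its inputs.
        if self.frac is not None and not isinstance(self.frac, (int, float)):
            raise TypeError("frac must be a number")

    @property
    def cmdline(self):
        """Build the command line string from the inputs that are set."""
        parts = ["bet", self.in_file, self.out_file]
        if self.frac is not None:
            parts.append("-f %.2f" % self.frac)
        if self.mask:
            parts.append("-m")
        return " ".join(parts)


toy = ToyBET(in_file="structural.nii", out_file="brain_anat.nii", frac=0.7)
print(toy.cmdline)  # bet structural.nii brain_anat.nii -f 0.70
```

The real interface does considerably more (for example, checking that input files exist and collecting the outputs), but the principle of mapping typed inputs to a command line is the same.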

In the Nipype framework we can get an information page on an interface class by using the help() function.

Example: Interface for FSL’s Brain Extraction Tool BET

# help() function to get a general explanation of the class as well as a list of possible (mandatory and optional) input and output parameters

fsl.BET.help()

Wraps the executable command ``bet``.

FSL BET wrapper for skull stripping

For complete details, see the `BET Documentation.

<https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/BET/UserGuide>`_

Examples

--------

>>> from nipype.interfaces import fsl

>>> btr = fsl.BET()

>>> btr.inputs.in_file = 'structural.nii'

>>> btr.inputs.frac = 0.7

>>> btr.inputs.out_file = 'brain_anat.nii'

>>> btr.cmdline

'bet structural.nii brain_anat.nii -f 0.70'

>>> res = btr.run() # doctest: +SKIP

Inputs::

[Mandatory]

in_file: (a pathlike object or string representing an existing file)

input file to skull strip

argument: ``%s``, position: 0

[Optional]

out_file: (a pathlike object or string representing a file)

name of output skull stripped image

argument: ``%s``, position: 1

outline: (a boolean)

create surface outline image

argument: ``-o``

mask: (a boolean)

create binary mask image

argument: ``-m``

skull: (a boolean)

create skull image

argument: ``-s``

no_output: (a boolean)

Don't generate segmented output

argument: ``-n``

frac: (a float)

fractional intensity threshold

argument: ``-f %.2f``

vertical_gradient: (a float)

vertical gradient in fractional intensity threshold (-1, 1)

argument: ``-g %.2f``

radius: (an integer)

head radius

argument: ``-r %d``

center: (a list of at most 3 items which are an integer)

center of gravity in voxels

argument: ``-c %s``

threshold: (a boolean)

apply thresholding to segmented brain image and mask

argument: ``-t``

mesh: (a boolean)

generate a vtk mesh brain surface

argument: ``-e``

robust: (a boolean)

robust brain centre estimation (iterates BET several times)

argument: ``-R``

mutually_exclusive: functional, reduce_bias, robust, padding,

remove_eyes, surfaces, t2_guided

padding: (a boolean)

improve BET if FOV is very small in Z (by temporarily padding end

slices)

argument: ``-Z``

mutually_exclusive: functional, reduce_bias, robust, padding,

remove_eyes, surfaces, t2_guided

remove_eyes: (a boolean)

eye & optic nerve cleanup (can be useful in SIENA)

argument: ``-S``

mutually_exclusive: functional, reduce_bias, robust, padding,

remove_eyes, surfaces, t2_guided

surfaces: (a boolean)

run bet2 and then betsurf to get additional skull and scalp surfaces

(includes registrations)

argument: ``-A``

mutually_exclusive: functional, reduce_bias, robust, padding,

remove_eyes, surfaces, t2_guided

t2_guided: (a pathlike object or string representing a file)

as with creating surfaces, when also feeding in non-brain-extracted

T2 (includes registrations)

argument: ``-A2 %s``

mutually_exclusive: functional, reduce_bias, robust, padding,

remove_eyes, surfaces, t2_guided

functional: (a boolean)

apply to 4D fMRI data

argument: ``-F``

mutually_exclusive: functional, reduce_bias, robust, padding,

remove_eyes, surfaces, t2_guided

reduce_bias: (a boolean)

bias field and neck cleanup

argument: ``-B``

mutually_exclusive: functional, reduce_bias, robust, padding,

remove_eyes, surfaces, t2_guided

output_type: ('NIFTI' or 'NIFTI_PAIR' or 'NIFTI_GZ' or

'NIFTI_PAIR_GZ')

FSL output type

args: (a string)

Additional parameters to the command

argument: ``%s``

environ: (a dictionary with keys which are a bytes or None or a value

of class 'str' and with values which are a bytes or None or a

value of class 'str', nipype default value: {})

Environment variables

Outputs::

out_file: (a pathlike object or string representing a file)

path/name of skullstripped file (if generated)

mask_file: (a pathlike object or string representing a file)

path/name of binary brain mask (if generated)

outline_file: (a pathlike object or string representing a file)

path/name of outline file (if generated)

meshfile: (a pathlike object or string representing a file)

path/name of vtk mesh file (if generated)

inskull_mask_file: (a pathlike object or string representing a file)

path/name of inskull mask (if generated)

inskull_mesh_file: (a pathlike object or string representing a file)

path/name of inskull mesh outline (if generated)

outskull_mask_file: (a pathlike object or string representing a file)

path/name of outskull mask (if generated)

outskull_mesh_file: (a pathlike object or string representing a file)

path/name of outskull mesh outline (if generated)

outskin_mask_file: (a pathlike object or string representing a file)

path/name of outskin mask (if generated)

outskin_mesh_file: (a pathlike object or string representing a file)

path/name of outskin mesh outline (if generated)

skull_mask_file: (a pathlike object or string representing a file)

path/name of skull mask (if generated)

skull_file: (a pathlike object or string representing a file)

path/name of skull file (if generated)

References:

-----------

None

# Create an instance of the fsl.BET object

skullstrip = fsl.BET()

# Set input (and output)

skullstrip.inputs.in_file = 'ds000102/sub-01/anat/sub-01_T1w.nii.gz'

skullstrip.inputs.out_file = 'output/T1w_nipype_bet.nii.gz' # Interfaces write results to the current working directory by default, which is why relative paths work (outputs are not stored in a temporary directory as with Nodes/Workflows)

# Execute the interface and show the outputs

res = skullstrip.run()

res.outputs

inskull_mask_file = <undefined>

inskull_mesh_file = <undefined>

mask_file = <undefined>

meshfile = <undefined>

out_file = /storage/tmp/tmpm_b9rmze/output/T1w_nipype_bet.nii.gz

outline_file = <undefined>

outskin_mask_file = <undefined>

outskin_mesh_file = <undefined>

outskull_mask_file = <undefined>

outskull_mesh_file = <undefined>

skull_file = <undefined>

skull_mask_file = <undefined>

# One additional line shows which command is executed under the hood

skullstrip.cmdline

'bet ds000102/sub-01/anat/sub-01_T1w.nii.gz output/T1w_nipype_bet.nii.gz'
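The help output above lists an argument template for each input (for example ``-A`` for surfaces and ``-A2 %s`` for t2_guided) together with the inputs it cannot be combined with. The following plain-Python sketch only illustrates that idea and is not how Nipype is implemented; the names ARG_TEMPLATES, EXCLUSIVE and build_cmdline are hypothetical.

```python
# Argument templates copied from the BET help text shown above.
ARG_TEMPLATES = {
    "surfaces": "-A",
    "t2_guided": "-A2 %s",
    "functional": "-F",
    "reduce_bias": "-B",
}

# Options that the help text lists as mutually exclusive.
EXCLUSIVE = {"functional", "reduce_bias", "robust", "padding",
             "remove_eyes", "surfaces", "t2_guided"}


def build_cmdline(in_file, out_file, **options):
    """Return a bet command string for the options that are set (hypothetical helper)."""
    chosen = [name for name, value in options.items() if value]
    clashes = sorted(EXCLUSIVE.intersection(chosen))
    if len(clashes) > 1:
        raise ValueError(f"mutually exclusive options set: {clashes}")
    parts = ["bet", in_file, out_file]
    for name in chosen:
        template = ARG_TEMPLATES[name]
        # Flags such as -F take no value; templates containing %s are filled in.
        parts.append(template % options[name] if "%s" in template else template)
    return " ".join(parts)


print(build_cmdline("T1w.nii.gz", "T1w_brain.nii.gz", reduce_bias=True))
```

With a real interface, the assembled command is available through the cmdline property, as in the cell above.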

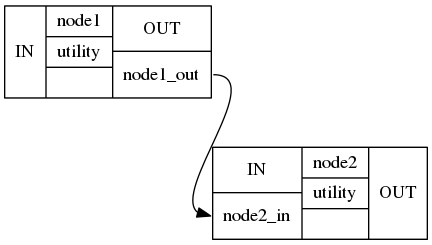

3.2. Nodes: The light wrapper around interfaces#

To streamline the analysis and to execute multiple interfaces in a sensible order, they need to be put in a Node.

A node is an object that executes a certain function: a Nipype interface, a user-specified function, or an external script.

Each node consists of a name, an interface category, at least one input field, and at least one output field.

\(\rightarrow\) Nodes expose the inputs and outputs of the Interface as their own and add functionality that allows Nodes to be connected into a Workflow (directed graph):

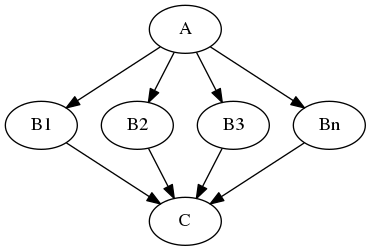

MapNode#

Quite similar to a normal Node, but it can take a list of inputs and operate over each input separately, ultimately returning a list of outputs.

Example: Several functional images (A) should each be motion corrected (B1, B2, B3, ...). Afterwards, all of them are entered into one GLM, i.e. the input for the GLM is a list [B1, B2, B3, …].

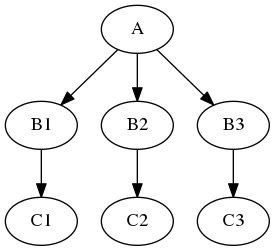

Iterables#

For repetitive steps: Iterables split up the execution workflow into many different branches.

Example: Running the same preprocessing on multiple subjects or doing statistical inference on multiple files.

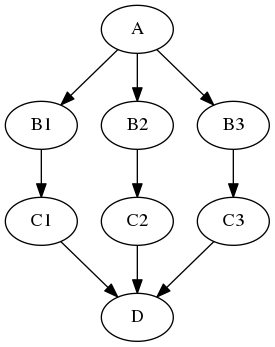

JoinNode#

Has the opposite effect of iterables: JoinNode merges the different branches back into one node.

A JoinNode generalizes MapNode to operate in conjunction with an upstream iterable node to reassemble downstream results, e.g., to merge files into a group level analysis.
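The difference between MapNode, iterables and JoinNode can be pictured with ordinary Python lists. This is only an analogy under simplifying assumptions, not Nipype code; motion_correct and fit_glm are hypothetical stand-ins for real processing steps.

```python
def motion_correct(run):
    """Stand-in for a step that processes one image (hypothetical)."""
    return f"{run}_mcf"


def fit_glm(runs):
    """Stand-in for a step that needs all images at once (hypothetical)."""
    return {"n_runs": len(runs), "inputs": list(runs)}


runs = ["run-1", "run-2", "run-3"]

# MapNode-like: one step is applied to every element and returns a list.
corrected = [motion_correct(run) for run in runs]
glm = fit_glm(corrected)

# Iterables-like: the same branch of steps is executed once per value.
subjects = ["sub-01", "sub-02"]
branches = {sub: [motion_correct(f"{sub}_{run}") for run in runs]
            for sub in subjects}

# JoinNode-like: the results of all branches are gathered into one input.
joined = [item for sub in subjects for item in branches[sub]]

print(corrected)
print(glm["n_runs"], len(joined))
```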

Example: Node#

nipype.pipeline.engine.nodes module

nodename = Nodetype(interface_function(), name='labelname')

nodename: Variable name of the node in the python environment.

Nodetype: Type of node: Node, MapNode or JoinNode.

interface_function: Function the node should execute. Can be user-defined or come from an Interface.

labelname: Label name of the node in the workflow environment (defines the name of the working directory).

To execute a node, apply the .run() method.

To return the output fields of the underlying interface, use .outputs.

To get help, .help() prints the interface help.

Specifying base_dir is very important (and is the reason for passing input files as absolute paths), because otherwise all outputs are saved somewhere in a temporary directory. Unlike interfaces, which by default write results to the local directory, the Workflow engine executes things in its own directory hierarchy.

# For reasons that will become clear in the Workflow section, it's important to pass filenames to Nodes as absolute paths.

input_file = opj(os.getcwd(), 'ds000102/sub-01/anat/sub-01_T1w.nii.gz')

output_file = opj(os.getcwd(), 'output/T1w_nipype_bet.nii.gz')

# Create FSL BET Node with fractional intensity threshold of 0.3 and create a binary mask image

bet = Node(fsl.BET(), name='bet_node')

# Define inputs

bet.inputs.frac = 0.3

bet.inputs.mask = True

bet.inputs.in_file = input_file

bet.inputs.out_file = output_file

# Run the node

res = bet.run()

241017-00:28:50,371 nipype.workflow INFO:

[Node] Setting-up "bet_node" in "/storage/tmp/tmp8zg_43os/bet_node".

241017-00:28:50,378 nipype.workflow INFO:

[Node] Executing "bet_node" <nipype.interfaces.fsl.preprocess.BET>

241017-00:28:51,106 nipype.interface INFO:

stdout 2024-10-17T00:28:51.106456:

241017-00:28:51,108 nipype.interface INFO:

stdout 2024-10-17T00:28:51.106456:Error: input image sub-01_T1w not valid

241017-00:28:51,113 nipype.interface INFO:

stdout 2024-10-17T00:28:51.106456:

241017-00:28:51,260 nipype.workflow INFO:

[Node] Finished "bet_node", elapsed time 0.879855s.

241017-00:28:51,267 nipype.workflow WARNING:

[Node] Error on "bet_node" (/storage/tmp/tmp8zg_43os/bet_node)

---------------------------------------------------------------------------

NodeExecutionError Traceback (most recent call last)

Cell In[14], line 17

14 bet.inputs.out_file = output_file

16 # Run the node

---> 17 res = bet.run()

File ~/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py:527, in Node.run(self, updatehash)

524 savepkl(op.join(outdir, "_inputs.pklz"), self.inputs.get_traitsfree())

526 try:

--> 527 result = self._run_interface(execute=True)

528 except Exception:

529 logger.warning('[Node] Error on "%s" (%s)', self.fullname, outdir)

File ~/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py:645, in Node._run_interface(self, execute, updatehash)

643 self._update_hash()

644 return self._load_results()

--> 645 return self._run_command(execute)

File ~/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py:771, in Node._run_command(self, execute, copyfiles)

769 # Always pass along the traceback

770 msg += f"Traceback:\n{_tab(runtime.traceback)}"

--> 771 raise NodeExecutionError(msg)

773 return result

NodeExecutionError: Exception raised while executing Node bet_node.

Cmdline:

bet sub-01_T1w.nii.gz /storage/tmp/tmpm_b9rmze/output/T1w_nipype_bet.nii.gz -f 0.30 -m

Stdout:

Error: input image sub-01_T1w not valid

Stderr:

Traceback:

RuntimeError: subprocess exited with code 1.

# Shows produced outputs

res.outputs

inskull_mask_file = <undefined>

inskull_mesh_file = <undefined>

mask_file = <undefined>

meshfile = <undefined>

out_file = /storage/tmp/tmpm_b9rmze/output/T1w_nipype_bet.nii.gz

outline_file = <undefined>

outskin_mask_file = <undefined>

outskin_mesh_file = <undefined>

outskull_mask_file = <undefined>

outskull_mesh_file = <undefined>

skull_file = <undefined>

skull_mask_file = <undefined>

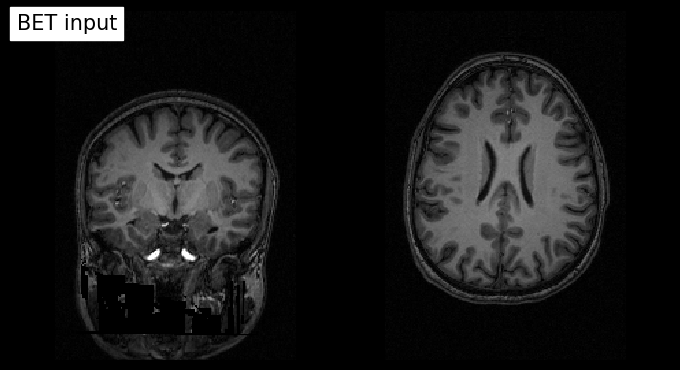

# Plot original input file

plotting.plot_anat(input_file, title='BET input', cut_coords=(10,10,10),

display_mode='ortho', dim=-1, draw_cross=False, annotate=False);

# Plot skullstripped output file (out_file) through the outputs property

plotting.plot_anat(res.outputs.out_file, title='BET output', cut_coords=(10,10,10),

display_mode='ortho', dim=-1, draw_cross=False, annotate=False);

3.3. Workflows#

Define functionality for pipelined execution of interfaces

Consist of multiple nodes, each representing a specific interface.

The processing stream is encoded as a directed acyclic graph (DAG), where each stage of processing is a node. Nodes are unidirectionally dependent on others, ensuring no cycles and clear directionality. The Node and Workflow classes make these relationships explicit.

Edges represent the data flow between nodes.

Control the setup and the execution of individual interfaces.

Will take care of inputs and outputs of each interface and arrange the execution of each interface in the most efficient way.

nipype.pipeline.engine.workflows module

Workflow(name, base_dir=None)

name: Label name of the workflow.

base_dir: Defines the working directory for this workflow. Unlike interfaces, which by default store results in the local directory, the Workflow engine executes things in its own directory hierarchy. If base_dir is not set manually, a temporary directory (/tmp) is used.

Workflow methods that we will use during this tutorial:

Workflow.connect(): Connect nodes in the pipeline

Workflow.write_graph(): Generates a graphviz dot file and a png file

Workflow.run(): Execute the workflow
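Judging from the node directories reported in the logs of this tutorial, each node works in a folder of the form base_dir/workflow name/node name. A minimal sketch of that layout follows; node_working_dir is a hypothetical helper, not part of Nipype.

```python
from pathlib import Path


def node_working_dir(base_dir, workflow_name, node_name):
    """Compose <base_dir>/<workflow name>/<node name> (hypothetical helper)."""
    return Path(base_dir) / workflow_name / node_name


# The current directory stands in for the notebook's working directory.
base_dir = Path.cwd() / "output" / "working_dir"
for node_name in ("skullstrip", "smooth", "mask"):
    print(node_working_dir(base_dir, "smoothflow", node_name))
```

Knowing this layout makes it easier to find intermediate files such as command.txt for a given node.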

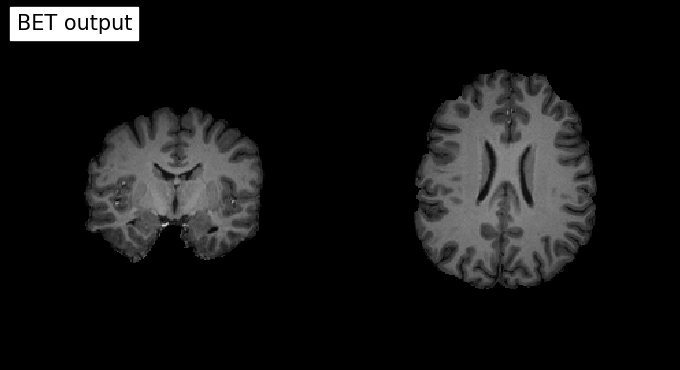

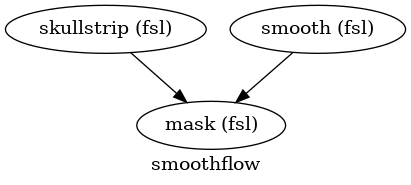

Example: Workflow#

First, define different nodes to:

Skullstrip an image to obtain a mask

Smooth the original image

Mask the smoothed image

in_file = input_file # See node example

# Skullstrip process

skullstrip = Node(fsl.BET(in_file=in_file, mask=True), name="skullstrip")

# Smooth process

smooth = Node(fsl.IsotropicSmooth(in_file=in_file, fwhm=4), name="smooth")

# Mask process

mask = Node(fsl.ApplyMask(), name="mask")
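The smooth node is given fwhm=4, whereas the fslmaths command line logged for this node in this tutorial uses -s 1.69864. The two values are related by the standard Gaussian identity sigma = FWHM / (2 * sqrt(2 * ln 2)). A short sketch of the conversion (fwhm_to_sigma is a hypothetical helper name):

```python
import math


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full width at half maximum to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


print(f"{fwhm_to_sigma(4):.5f}")  # → 1.69864
```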

# Create a working directory for all workflows created during this workshop

! [ ! -d output/working_dir ] && mkdir output/working_dir

wf_work_dir = opj(os.getcwd(), 'output/working_dir')

# Initiation of a workflow with specifying the working directory.

# Specifying base_dir is very important (and is why we needed to use absolute paths above for the input files) because otherwise all the outputs would be saved somewhere in a temporary directory.

wf = Workflow(name="smoothflow", base_dir=wf_work_dir )

Connect nodes within a workflow#

A method called connect does most of the work.

It checks whether the inputs and outputs are actually provided by the nodes that are being connected.

\(\rightarrow\) There are two different ways to call connect:

Establish one connection at a time:

wf.connect(source, "source_output", dest, "dest_input")

Establish multiple connections between two nodes at once:

wf.connect([(source, dest, [("source_output1", "dest_input1"),

("source_output2", "dest_input2")

])

])
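The two call styles describe the same connections. The sketch below flattens the list form into single (source, output, destination, input) connections to make the correspondence visible. It is a plain-Python illustration, not Nipype's implementation; expand_connections is a hypothetical helper and the nodes are represented by strings.

```python
def expand_connections(connection_list):
    """Flatten [(source, dest, [(out, in), ...]), ...] into single connections."""
    single = []
    for source, dest, field_pairs in connection_list:
        for source_output, dest_input in field_pairs:
            single.append((source, source_output, dest, dest_input))
    return single


# Node names are plain strings here; in Nipype they would be Node objects.
connections = [("skullstrip", "mask", [("mask_file", "mask_file")]),
               ("smooth", "mask", [("out_file", "in_file")])]

for source, source_output, dest, dest_input in expand_connections(connections):
    print(f"{source}.{source_output} -> {dest}.{dest_input}")
```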

# Option 1: connect the binary mask of the skullstripping process to the mask node

wf.connect(skullstrip, "mask_file", mask, "mask_file")

# Option 2: connect the output of the smoothing node to the input of the masking node

wf.connect([(smooth, mask, [("out_file", "in_file")])])

# Explore the workflow visually

wf.write_graph("workflow_graph.dot")

Image(filename=opj(wf_work_dir,"smoothflow/workflow_graph.png"))

241017-00:28:56,873 nipype.workflow INFO:

Generated workflow graph: /storage/tmp/tmpm_b9rmze/output/working_dir/smoothflow/workflow_graph.png (graph2use=hierarchical, simple_form=True).

# Certain graph types also allow you to further inspect the individual connections between the nodes

wf.write_graph(graph2use='flat')

Image(filename=opj(wf_work_dir,"smoothflow/graph_detailed.png"))

241017-00:28:57,444 nipype.workflow INFO:

Generated workflow graph: /storage/tmp/tmpm_b9rmze/output/working_dir/smoothflow/graph.png (graph2use=flat, simple_form=True).

# Execute the workflow (running serially here)

wf.run()

241017-00:28:57,475 nipype.workflow INFO:

Workflow smoothflow settings: ['check', 'execution', 'logging', 'monitoring']

241017-00:28:57,482 nipype.workflow INFO:

Running serially.

241017-00:28:57,483 nipype.workflow INFO:

[Node] Setting-up "smoothflow.skullstrip" in "/storage/tmp/tmpm_b9rmze/output/working_dir/smoothflow/skullstrip".

241017-00:28:57,488 nipype.workflow INFO:

[Node] Executing "skullstrip" <nipype.interfaces.fsl.preprocess.BET>

241017-00:28:58,42 nipype.interface INFO:

stdout 2024-10-17T00:28:58.042451:

241017-00:28:58,44 nipype.interface INFO:

stdout 2024-10-17T00:28:58.042451:Error: input image sub-01_T1w not valid

241017-00:28:58,45 nipype.interface INFO:

stdout 2024-10-17T00:28:58.042451:

241017-00:28:58,269 nipype.workflow INFO:

[Node] Finished "skullstrip", elapsed time 0.778167s.

241017-00:28:58,273 nipype.workflow WARNING:

[Node] Error on "smoothflow.skullstrip" (/storage/tmp/tmpm_b9rmze/output/working_dir/smoothflow/skullstrip)

241017-00:28:58,277 nipype.workflow ERROR:

Node skullstrip failed to run on host github-action-runner.

241017-00:28:58,279 nipype.workflow ERROR:

Saving crash info to /storage/tmp/tmpm_b9rmze/crash-20241017-002858-ubuntu-skullstrip-b39b7d57-59cd-4d0c-91af-c3eaade38f4a.pklz

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.10/site-packages/nipype/pipeline/plugins/linear.py", line 47, in run

node.run(updatehash=updatehash)

File "/home/ubuntu/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 527, in run

result = self._run_interface(execute=True)

File "/home/ubuntu/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 645, in _run_interface

return self._run_command(execute)

File "/home/ubuntu/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 771, in _run_command

raise NodeExecutionError(msg)

nipype.pipeline.engine.nodes.NodeExecutionError: Exception raised while executing Node skullstrip.

Cmdline:

bet sub-01_T1w.nii.gz sub-01_T1w_brain.nii.gz -m

Stdout:

Error: input image sub-01_T1w not valid

Stderr:

Traceback:

RuntimeError: subprocess exited with code 1.

241017-00:28:58,282 nipype.workflow INFO:

[Node] Setting-up "smoothflow.smooth" in "/storage/tmp/tmpm_b9rmze/output/working_dir/smoothflow/smooth".

241017-00:28:58,286 nipype.workflow INFO:

[Node] Executing "smooth" <nipype.interfaces.fsl.maths.IsotropicSmooth>

241017-00:28:58,697 nipype.interface INFO:

stderr 2024-10-17T00:28:58.697242:Image Exception : #63 :: No image files match: /storage/tmp/tmpm_b9rmze/ds000102/sub-01/anat/sub-01_T1w

241017-00:28:58,699 nipype.interface INFO:

stderr 2024-10-17T00:28:58.699569:terminate called after throwing an instance of 'std::runtime_error'

241017-00:28:58,703 nipype.interface INFO:

stderr 2024-10-17T00:28:58.699569: what(): No image files match: /storage/tmp/tmpm_b9rmze/ds000102/sub-01/anat/sub-01_T1w

241017-00:28:59,63 nipype.interface INFO:

stderr 2024-10-17T00:28:59.062847:/cvmfs/neurodesk.ardc.edu.au/containers/fsl_6.0.7.4_20231005/fslmaths: line 3: 3571752 Aborted (core dumped) singularity --silent exec $neurodesk_singularity_opts --pwd "$PWD" /cvmfs/neurodesk.ardc.edu.au/containers/fsl_6.0.7.4_20231005/fsl_6.0.7.4_20231005.simg fslmaths "$@"

241017-00:28:59,267 nipype.workflow INFO:

[Node] Finished "smooth", elapsed time 0.97755s.

241017-00:28:59,271 nipype.workflow WARNING:

[Node] Error on "smoothflow.smooth" (/storage/tmp/tmpm_b9rmze/output/working_dir/smoothflow/smooth)

241017-00:28:59,273 nipype.workflow ERROR:

Node smooth failed to run on host github-action-runner.

241017-00:28:59,274 nipype.workflow ERROR:

Saving crash info to /storage/tmp/tmpm_b9rmze/crash-20241017-002859-ubuntu-smooth-20979568-bbbe-4f82-8a87-e1315b14774d.pklz

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.10/site-packages/nipype/pipeline/plugins/linear.py", line 47, in run

node.run(updatehash=updatehash)

File "/home/ubuntu/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 527, in run

result = self._run_interface(execute=True)

File "/home/ubuntu/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 645, in _run_interface

return self._run_command(execute)

File "/home/ubuntu/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 771, in _run_command

raise NodeExecutionError(msg)

nipype.pipeline.engine.nodes.NodeExecutionError: Exception raised while executing Node smooth.

Cmdline:

fslmaths /storage/tmp/tmpm_b9rmze/ds000102/sub-01/anat/sub-01_T1w.nii.gz -s 1.69864 /storage/tmp/tmpm_b9rmze/output/working_dir/smoothflow/smooth/sub-01_T1w_smooth.nii.gz

Stdout:

Stderr:

Image Exception : #63 :: No image files match: /storage/tmp/tmpm_b9rmze/ds000102/sub-01/anat/sub-01_T1w

terminate called after throwing an instance of 'std::runtime_error'

what(): No image files match: /storage/tmp/tmpm_b9rmze/ds000102/sub-01/anat/sub-01_T1w

/cvmfs/neurodesk.ardc.edu.au/containers/fsl_6.0.7.4_20231005/fslmaths: line 3: 3571752 Aborted (core dumped) singularity --silent exec $neurodesk_singularity_opts --pwd "$PWD" /cvmfs/neurodesk.ardc.edu.au/containers/fsl_6.0.7.4_20231005/fsl_6.0.7.4_20231005.simg fslmaths "$@"

Traceback:

RuntimeError: subprocess exited with code 134.

241017-00:28:59,277 nipype.workflow INFO:

***********************************

241017-00:28:59,278 nipype.workflow ERROR:

could not run node: smoothflow.skullstrip

241017-00:28:59,279 nipype.workflow INFO:

crashfile: /storage/tmp/tmpm_b9rmze/crash-20241017-002858-ubuntu-skullstrip-b39b7d57-59cd-4d0c-91af-c3eaade38f4a.pklz

241017-00:28:59,280 nipype.workflow ERROR:

could not run node: smoothflow.smooth

241017-00:28:59,281 nipype.workflow INFO:

crashfile: /storage/tmp/tmpm_b9rmze/crash-20241017-002859-ubuntu-smooth-20979568-bbbe-4f82-8a87-e1315b14774d.pklz

241017-00:28:59,282 nipype.workflow INFO:

***********************************

---------------------------------------------------------------------------

NodeExecutionError Traceback (most recent call last)

File ~/.local/lib/python3.10/site-packages/nipype/pipeline/plugins/linear.py:47, in LinearPlugin.run(self, graph, config, updatehash)

46 self._status_callback(node, "start")

---> 47 node.run(updatehash=updatehash)

48 except Exception as exc:

File ~/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py:527, in Node.run(self, updatehash)

526 try:

--> 527 result = self._run_interface(execute=True)

528 except Exception:

File ~/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py:645, in Node._run_interface(self, execute, updatehash)

644 return self._load_results()

--> 645 return self._run_command(execute)

File ~/.local/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py:771, in Node._run_command(self, execute, copyfiles)

770 msg += f"Traceback:\n{_tab(runtime.traceback)}"

--> 771 raise NodeExecutionError(msg)

773 return result

NodeExecutionError: Exception raised while executing Node skullstrip.

Cmdline:

bet sub-01_T1w.nii.gz sub-01_T1w_brain.nii.gz -m

Stdout:

Error: input image sub-01_T1w not valid

Stderr:

Traceback:

RuntimeError: subprocess exited with code 1.

The above exception was the direct cause of the following exception:

RuntimeError Traceback (most recent call last)

Cell In[22], line 2

1 # Execute the workflow (running serially here)

----> 2 wf.run()

File ~/.local/lib/python3.10/site-packages/nipype/pipeline/engine/workflows.py:638, in Workflow.run(self, plugin, plugin_args, updatehash)

636 if str2bool(self.config["execution"]["create_report"]):

637 self._write_report_info(self.base_dir, self.name, execgraph)

--> 638 runner.run(execgraph, updatehash=updatehash, config=self.config)

639 datestr = datetime.utcnow().strftime("%Y%m%dT%H%M%S")

640 if str2bool(self.config["execution"]["write_provenance"]):

File ~/.local/lib/python3.10/site-packages/nipype/pipeline/plugins/linear.py:82, in LinearPlugin.run(self, graph, config, updatehash)

76 if len(errors) > 1:

77 error, cause = (

78 RuntimeError(f"{len(errors)} raised. Re-raising first."),

79 error,

80 )

---> 82 raise error from cause

RuntimeError: 2 raised. Re-raising first.

# Check the working directories of the workflow

!tree output/working_dir/smoothflow/ -I '*js|*json|*html|*pklz|_report'

output/working_dir/smoothflow/

├── graph_detailed.dot

├── graph_detailed.png

├── graph.dot

├── graph.png

├── skullstrip

│ ├── command.txt

│ └── sub-01_T1w.nii.gz -> /storage/tmp/tmpm_b9rmze/ds000102/sub-01/anat/sub-01_T1w.nii.gz

├── smooth

│ └── command.txt

├── workflow_graph.dot

└── workflow_graph.png

2 directories, 9 files

# Helper function to plot 3D NIfTI images

def plot_slice(fname):

# Load the image

img = nib.load(fname)

data = img.get_fdata()

# Cut in the middle of the brain

cut = int(data.shape[-1]/2) + 10

# Plot the data

plt.imshow(np.rot90(data[..., cut]), cmap="gray")

plt.gca().set_axis_off()

f = plt.figure(figsize=(12, 4))

for i, img in enumerate([input_file,

opj(wf_work_dir, "smoothflow/smooth/sub-01_T1w_smooth.nii.gz"),

opj(wf_work_dir, "smoothflow/skullstrip/sub-01_T1w_brain_mask.nii.gz"),

opj(wf_work_dir, "smoothflow/mask/sub-01_T1w_smooth_masked.nii.gz")]):

f.add_subplot(1, 4, i + 1)

plot_slice(img)

---------------------------------------------------------------------------

FileNotFoundError Traceback (most recent call last)

File ~/.local/lib/python3.10/site-packages/nibabel/loadsave.py:100, in load(filename, **kwargs)

99 try:

--> 100 stat_result = os.stat(filename)

101 except OSError:

FileNotFoundError: [Errno 2] No such file or directory: '/storage/tmp/tmpm_b9rmze/output/working_dir/smoothflow/smooth/sub-01_T1w_smooth.nii.gz'

During handling of the above exception, another exception occurred:

FileNotFoundError Traceback (most recent call last)

Cell In[25], line 7

2 for i, img in enumerate([input_file,

3 opj(wf_work_dir, "smoothflow/smooth/sub-01_T1w_smooth.nii.gz"),

4 opj(wf_work_dir, "smoothflow/skullstrip/sub-01_T1w_brain_mask.nii.gz"),

5 opj(wf_work_dir, "smoothflow/mask/sub-01_T1w_smooth_masked.nii.gz")]):

6 f.add_subplot(1, 4, i + 1)

----> 7 plot_slice(img)

Cell In[24], line 5, in plot_slice(fname)

2 def plot_slice(fname):

3

4 # Load the image

----> 5 img = nib.load(fname)

6 data = img.get_fdata()

8 # Cut in the middle of the brain

File ~/.local/lib/python3.10/site-packages/nibabel/loadsave.py:102, in load(filename, **kwargs)

100 stat_result = os.stat(filename)

101 except OSError:

--> 102 raise FileNotFoundError(f"No such file or no access: '{filename}'")

103 if stat_result.st_size <= 0:

104 raise ImageFileError(f"Empty file: '{filename}'")

FileNotFoundError: No such file or no access: '/storage/tmp/tmpm_b9rmze/output/working_dir/smoothflow/smooth/sub-01_T1w_smooth.nii.gz'

3.4. Execution Plugins: Execution on different systems#

Allow seamless execution across many architectures and make using parallel computation quite easy.

Local Machines:

Serial: Runs the workflow one node at a time in a single process locally. The order of the nodes is determined by a topological sort of the workflow.

Multicore: Uses the Python multiprocessing library to distribute jobs as new processes on a local system.

Submission to Cluster Schedulers:

Plugins like HTCondor, PBS, SLURM, SGE, OAR, and LSF submit jobs to clusters managed by these job scheduling systems.

Advanced Cluster Integration:

DAGMan: Manages complex workflow dependencies for submission to DAGMan cluster scheduler.

IPython: Utilizes IPython parallel computing capabilities for distributed execution in clusters.

Specialized Execution Plugins:

Soma-Workflow: Integrates with Soma-Workflow system for distributed execution in HPC environments.

Cluster operation often needs a special setup.
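To illustrate what a topological sort means for the serial plugin, the sketch below orders the three nodes of the smoothflow example with Python's standard-library graphlib (Python 3.9 or later). It shows the concept only and does not reproduce how Nipype computes its execution order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each key depends on the nodes in its value set (names from the smoothflow example).
dependencies = {
    "skullstrip": set(),
    "smooth": set(),
    "mask": {"skullstrip", "smooth"},
}

order = list(TopologicalSorter(dependencies).static_order())
print(order)  # "mask" always comes after both of its predecessors
```

The relative order of skullstrip and smooth is not fixed by the graph, because neither depends on the other.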

All plugins can be executed with:

workflow.run(plugin=PLUGIN_NAME, plugin_args=ARGS_DICT)

To run the workflow one node at a time:

wf.run(plugin='Linear')

To distribute processing on a multicore machine (the number of processors/threads is detected automatically):

wf.run(plugin='MultiProc')

Plugin arguments:

arguments = {'n_procs' : num_threads,

'memory_gb' : num_gb}

wf.run(plugin='MultiProc', plugin_args=arguments)
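In the snippet above, num_threads and num_gb are left undefined. One possible way to fill them in is sketched here; the memory value is a hypothetical placeholder and should be adapted to the machine at hand.

```python
import os

# os.cpu_count() may return None, so fall back to a single process.
num_threads = os.cpu_count() or 1

# Hypothetical memory budget in GB; adjust to the available RAM.
num_gb = 8

arguments = {"n_procs": num_threads, "memory_gb": num_gb}
print(arguments)
```

The resulting dictionary can then be passed as plugin_args in the wf.run() call shown above.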

In order to use Nipype with SLURM simply call:

wf.run(plugin='SLURM')

Optional arguments:

template: If you want to use your own job submission template (the plugin generates a basic one by default).

sbatch_args: Takes any arguments such as nodes/partitions/gres/etc that you would want to pass on to the sbatch command underneath.

jobid_re: Regular expression for custom job submission id search.
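As a sketch of how such optional arguments might look, the example below builds a plugin_args dictionary and checks a job-id pattern against the line that sbatch prints after a successful submission. The partition name, the resource limits and the pattern are assumptions chosen for illustration, not defaults taken from Nipype.

```python
import re

# Line printed by sbatch after a successful submission (job number is made up).
sbatch_output = "Submitted batch job 123456"

# Hypothetical pattern of the kind that could be passed as jobid_re.
jobid_re = r"Submitted batch job (\d+)"

match = re.search(jobid_re, sbatch_output)
job_id = match.group(1) if match else None
print(job_id)  # → 123456

# Hypothetical plugin arguments; partition name and limits are placeholders.
plugin_args = {
    "sbatch_args": "--partition=general --mem=8G --time=01:00:00",
    "jobid_re": jobid_re,
}
```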

3.5. Data Input: First step of every analysis#

Nipype provides many different modules for getting data into the framework.

We will work through an example with the DataGrabber module:

DataGrabber: Versatile input module to retrieve data from a local file system based on user-defined search criteria, including wildcard patterns, regular expressions, and directory hierarchies. It supports almost any file organization of your data.

But there are many more alternatives available:

SelectFiles: A simpler alternative to the DataGrabber interface, built on Python format strings. Format strings allow you to replace named sections of template strings set off by curly braces ({}).

BIDSDataGrabber: Get neuroimaging data organized in BIDS-compliant directory structures. It simplifies the process of accessing and organizing neuroimaging data for analysis pipelines.

DataFinder: Search for paths that match a given regular expression. Allows a less prescriptive approach to gathering input files compared to DataGrabber.

FreeSurferSource: Specific case of a file grabber that facilitates the data import of outputs from the FreeSurfer recon-all algorithm.

JSONFileGrabber: Datagrabber interface that loads a json file and generates an output for every first-level object.

S3DataGrabber: Pull data from an Amazon S3 Bucket.

SSHDataGrabber: Extension of the DataGrabber module that downloads the file list and optionally the files from an SSH server.

XNATSource: Pull data from an XNAT server.
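The format-string idea behind SelectFiles can be tried out in plain Python. The sketch below fills templates with named fields for the Flanker dataset layout; it only demonstrates str.format and does not call Nipype, and fill_templates is a hypothetical helper.

```python
# Templates with named fields set off by curly braces.
templates = {
    "anat": "sub-{subject_id}/anat/sub-{subject_id}_T1w.nii.gz",
    "func": "sub-{subject_id}/func/sub-{subject_id}_task-flanker_run-{run}_bold.nii.gz",
}


def fill_templates(templates, **fields):
    """Fill every template with the given field values (hypothetical helper)."""
    return {key: template.format(**fields) for key, template in templates.items()}


paths = fill_templates(templates, subject_id="01", run=1)
print(paths["anat"])
print(paths["func"])
```

Fields that a template does not use, such as run in the anatomical template, are simply ignored by str.format.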

Example: DataGrabber#

Let’s assume we want to grab the anatomical and functional images of certain subjects of the Flanker dataset:

!tree -L 4 ds000102/ -I '*csv|*pdf'

ds000102/

├── CHANGES

├── dataset_description.json

├── derivatives

│ └── mriqc

├── participants.tsv

├── README

├── sub-01

│ ├── anat

│ │ └── sub-01_T1w.nii.gz -> ../../.git/annex/objects/Pf/6k/MD5E-s10581116--757e697a01eeea5c97a7d6fbc7153373.nii.gz/MD5E-s10581116--757e697a01eeea5c97a7d6fbc7153373.nii.gz

│ └── func

│ ├── sub-01_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/5m/w9/MD5E-s28061534--8e8c44ff53f9b5d46f2caae5916fa4ef.nii.gz/MD5E-s28061534--8e8c44ff53f9b5d46f2caae5916fa4ef.nii.gz

│ ├── sub-01_task-flanker_run-1_events.tsv

│ ├── sub-01_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/2F/58/MD5E-s28143286--f0bcf782c3688e2cf7149b4665949484.nii.gz/MD5E-s28143286--f0bcf782c3688e2cf7149b4665949484.nii.gz

│ └── sub-01_task-flanker_run-2_events.tsv

├── sub-02

│ ├── anat

│ │ └── sub-02_T1w.nii.gz -> ../../.git/annex/objects/3m/FF/MD5E-s10737123--cbd4181ee26559e8ec0a441fa2f834a7.nii.gz/MD5E-s10737123--cbd4181ee26559e8ec0a441fa2f834a7.nii.gz

│ └── func

│ ├── sub-02_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/8v/2j/MD5E-s29188378--80050f0deb13562c24f2fc23f8d095bd.nii.gz/MD5E-s29188378--80050f0deb13562c24f2fc23f8d095bd.nii.gz

│ ├── sub-02_task-flanker_run-1_events.tsv

│ ├── sub-02_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/fM/Kw/MD5E-s29193540--cc013f2d7d148b448edca8aada349d02.nii.gz/MD5E-s29193540--cc013f2d7d148b448edca8aada349d02.nii.gz

│ └── sub-02_task-flanker_run-2_events.tsv

├── sub-03

│ ├── anat

│ │ └── sub-03_T1w.nii.gz -> ../../.git/annex/objects/7W/9z/MD5E-s10707026--8f1858934cc7c7457e3a4a71cc2131fc.nii.gz/MD5E-s10707026--8f1858934cc7c7457e3a4a71cc2131fc.nii.gz

│ └── func

│ ├── sub-03_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/q6/kF/MD5E-s28755729--b19466702eee6b9385bd6e19e362f94c.nii.gz/MD5E-s28755729--b19466702eee6b9385bd6e19e362f94c.nii.gz

│ ├── sub-03_task-flanker_run-1_events.tsv

│ ├── sub-03_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/zV/K1/MD5E-s28782544--8d9700a435d08c90f0c1d534efdc8b69.nii.gz/MD5E-s28782544--8d9700a435d08c90f0c1d534efdc8b69.nii.gz

│ └── sub-03_task-flanker_run-2_events.tsv

├── sub-04

│ ├── anat

│ │ └── sub-04_T1w.nii.gz -> ../../.git/annex/objects/FW/14/MD5E-s10738444--2a9a2ba4ea7d2324c84bf5a2882f196c.nii.gz/MD5E-s10738444--2a9a2ba4ea7d2324c84bf5a2882f196c.nii.gz

│ └── func

│ ├── sub-04_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/9Z/0Q/MD5E-s29062799--27171406951ea275cb5857ea0dc32345.nii.gz/MD5E-s29062799--27171406951ea275cb5857ea0dc32345.nii.gz

│ ├── sub-04_task-flanker_run-1_events.tsv

│ ├── sub-04_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/FW/FZ/MD5E-s29071279--f89b61fe3ebab26df1374f2564bd95c2.nii.gz/MD5E-s29071279--f89b61fe3ebab26df1374f2564bd95c2.nii.gz

│ └── sub-04_task-flanker_run-2_events.tsv

├── sub-05

│ ├── anat

│ │ └── sub-05_T1w.nii.gz -> ../../.git/annex/objects/k2/Kj/MD5E-s10753867--c4b5788da5f4c627f0f5862da5f46c35.nii.gz/MD5E-s10753867--c4b5788da5f4c627f0f5862da5f46c35.nii.gz

│ └── func

│ ├── sub-05_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/VZ/z5/MD5E-s29667270--0ce9ac78b6aa9a77fc94c655a6ff5a06.nii.gz/MD5E-s29667270--0ce9ac78b6aa9a77fc94c655a6ff5a06.nii.gz

│ ├── sub-05_task-flanker_run-1_events.tsv

│ ├── sub-05_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/z7/MP/MD5E-s29660544--752750dabb21e2cf28e87d1d550a71b9.nii.gz/MD5E-s29660544--752750dabb21e2cf28e87d1d550a71b9.nii.gz

│ └── sub-05_task-flanker_run-2_events.tsv

├── sub-06

│ ├── anat

│ │ └── sub-06_T1w.nii.gz -> ../../.git/annex/objects/5w/G0/MD5E-s10620585--1132eab3830fe59b8a10b6582bb49004.nii.gz/MD5E-s10620585--1132eab3830fe59b8a10b6582bb49004.nii.gz

│ └── func

│ ├── sub-06_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/3x/qj/MD5E-s29386982--e671c0c647ce7d0d4596e35b702ee970.nii.gz/MD5E-s29386982--e671c0c647ce7d0d4596e35b702ee970.nii.gz

│ ├── sub-06_task-flanker_run-1_events.tsv

│ ├── sub-06_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/9j/6P/MD5E-s29379265--e513a2746d2b5c603f96044cf48c557c.nii.gz/MD5E-s29379265--e513a2746d2b5c603f96044cf48c557c.nii.gz

│ └── sub-06_task-flanker_run-2_events.tsv

├── sub-07

│ ├── anat

│ │ └── sub-07_T1w.nii.gz -> ../../.git/annex/objects/08/fF/MD5E-s10718092--38481fbc489dfb1ec4b174b57591a074.nii.gz/MD5E-s10718092--38481fbc489dfb1ec4b174b57591a074.nii.gz

│ └── func

│ ├── sub-07_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/z1/7W/MD5E-s28946009--5baf7a314874b280543fc0f91f2731af.nii.gz/MD5E-s28946009--5baf7a314874b280543fc0f91f2731af.nii.gz

│ ├── sub-07_task-flanker_run-1_events.tsv

│ ├── sub-07_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/Jf/W7/MD5E-s28960603--682e13963bfc49cc6ae05e9ba5c62619.nii.gz/MD5E-s28960603--682e13963bfc49cc6ae05e9ba5c62619.nii.gz

│ └── sub-07_task-flanker_run-2_events.tsv

├── sub-08

│ ├── anat

│ │ └── sub-08_T1w.nii.gz -> ../../.git/annex/objects/mw/MM/MD5E-s10561256--b94dddd8dc1c146aa8cd97f8d9994146.nii.gz/MD5E-s10561256--b94dddd8dc1c146aa8cd97f8d9994146.nii.gz

│ └── func

│ ├── sub-08_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/zX/v9/MD5E-s28641609--47314e6d1a14b8545686110b5b67f8b8.nii.gz/MD5E-s28641609--47314e6d1a14b8545686110b5b67f8b8.nii.gz

│ ├── sub-08_task-flanker_run-1_events.tsv

│ ├── sub-08_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/WZ/F0/MD5E-s28636310--4535bf26281e1c5556ad0d3468e7fe4e.nii.gz/MD5E-s28636310--4535bf26281e1c5556ad0d3468e7fe4e.nii.gz

│ └── sub-08_task-flanker_run-2_events.tsv

├── sub-09

│ ├── anat

│ │ └── sub-09_T1w.nii.gz -> ../../.git/annex/objects/QJ/ZZ/MD5E-s10775967--e6a18e64bc0a6b17254a9564cf9b8f82.nii.gz/MD5E-s10775967--e6a18e64bc0a6b17254a9564cf9b8f82.nii.gz

│ └── func

│ ├── sub-09_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/k9/1X/MD5E-s29200533--59e86a903e0ab3d1d320c794ba1f0777.nii.gz/MD5E-s29200533--59e86a903e0ab3d1d320c794ba1f0777.nii.gz

│ ├── sub-09_task-flanker_run-1_events.tsv

│ ├── sub-09_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/W3/94/MD5E-s29223017--7f3fb9e260d3bd28e29b0b586ce4c344.nii.gz/MD5E-s29223017--7f3fb9e260d3bd28e29b0b586ce4c344.nii.gz

│ └── sub-09_task-flanker_run-2_events.tsv

├── sub-10

│ ├── anat

│ │ └── sub-10_T1w.nii.gz -> ../../.git/annex/objects/5F/3f/MD5E-s10750712--bde2309077bffe22cb65e42ebdce5bfa.nii.gz/MD5E-s10750712--bde2309077bffe22cb65e42ebdce5bfa.nii.gz

│ └── func

│ ├── sub-10_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/3p/qp/MD5E-s29732696--339715d5cec387f4d44dfe94f304a429.nii.gz/MD5E-s29732696--339715d5cec387f4d44dfe94f304a429.nii.gz

│ ├── sub-10_task-flanker_run-1_events.tsv

│ ├── sub-10_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/11/Zx/MD5E-s29724034--16f2bf452524a315182f188becc1866d.nii.gz/MD5E-s29724034--16f2bf452524a315182f188becc1866d.nii.gz

│ └── sub-10_task-flanker_run-2_events.tsv

├── sub-11

│ ├── anat

│ │ └── sub-11_T1w.nii.gz -> ../../.git/annex/objects/kj/xX/MD5E-s10534963--9e5bff7ec0b5df2850e1d05b1af281ba.nii.gz/MD5E-s10534963--9e5bff7ec0b5df2850e1d05b1af281ba.nii.gz

│ └── func

│ ├── sub-11_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/35/fk/MD5E-s28226875--d5012074c2c7a0a394861b010bcf9a8f.nii.gz/MD5E-s28226875--d5012074c2c7a0a394861b010bcf9a8f.nii.gz

│ ├── sub-11_task-flanker_run-1_events.tsv

│ ├── sub-11_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/j7/ff/MD5E-s28198976--c0a64e3b549568c44bb40b1588027c9a.nii.gz/MD5E-s28198976--c0a64e3b549568c44bb40b1588027c9a.nii.gz

│ └── sub-11_task-flanker_run-2_events.tsv

├── sub-12

│ ├── anat

│ │ └── sub-12_T1w.nii.gz -> ../../.git/annex/objects/kx/2F/MD5E-s10550168--a7f651adc817b6678148b575654532a4.nii.gz/MD5E-s10550168--a7f651adc817b6678148b575654532a4.nii.gz

│ └── func

│ ├── sub-12_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/M0/fX/MD5E-s28403807--f1c3eb2e519020f4315a696ea845fc01.nii.gz/MD5E-s28403807--f1c3eb2e519020f4315a696ea845fc01.nii.gz

│ ├── sub-12_task-flanker_run-1_events.tsv

│ ├── sub-12_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/vW/V0/MD5E-s28424992--8740628349be3c056a0411bf4a852b25.nii.gz/MD5E-s28424992--8740628349be3c056a0411bf4a852b25.nii.gz

│ └── sub-12_task-flanker_run-2_events.tsv

├── sub-13

│ ├── anat

│ │ └── sub-13_T1w.nii.gz -> ../../.git/annex/objects/wM/Xw/MD5E-s10609761--440413c3251d182086105649164222c6.nii.gz/MD5E-s10609761--440413c3251d182086105649164222c6.nii.gz

│ └── func

│ ├── sub-13_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/mf/M4/MD5E-s28180916--aa35f4ad0cf630d6396a8a2dd1f3dda6.nii.gz/MD5E-s28180916--aa35f4ad0cf630d6396a8a2dd1f3dda6.nii.gz

│ ├── sub-13_task-flanker_run-1_events.tsv

│ ├── sub-13_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/XP/76/MD5E-s28202786--8caf1ac548c87b2b35f85e8ae2bf72c1.nii.gz/MD5E-s28202786--8caf1ac548c87b2b35f85e8ae2bf72c1.nii.gz

│ └── sub-13_task-flanker_run-2_events.tsv

├── sub-14

│ ├── anat

│ │ └── sub-14_T1w.nii.gz -> ../../.git/annex/objects/Zw/0z/MD5E-s9223596--33abfb5da565f3487e3a7aebc15f940c.nii.gz/MD5E-s9223596--33abfb5da565f3487e3a7aebc15f940c.nii.gz

│ └── func

│ ├── sub-14_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/Jp/29/MD5E-s29001492--250f1e4daa9be1d95e06af0d56629cc9.nii.gz/MD5E-s29001492--250f1e4daa9be1d95e06af0d56629cc9.nii.gz

│ ├── sub-14_task-flanker_run-1_events.tsv

│ ├── sub-14_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/PK/V2/MD5E-s29068193--5621a3b0af8132c509420b4ad9aaf8fb.nii.gz/MD5E-s29068193--5621a3b0af8132c509420b4ad9aaf8fb.nii.gz

│ └── sub-14_task-flanker_run-2_events.tsv

├── sub-15

│ ├── anat

│ │ └── sub-15_T1w.nii.gz -> ../../.git/annex/objects/Mz/qq/MD5E-s10752891--ddd2622f115ec0d29a0c7ab2366f6f95.nii.gz/MD5E-s10752891--ddd2622f115ec0d29a0c7ab2366f6f95.nii.gz

│ └── func

│ ├── sub-15_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/08/JJ/MD5E-s28285239--feda22c4526af1910fcee58d4c42f07e.nii.gz/MD5E-s28285239--feda22c4526af1910fcee58d4c42f07e.nii.gz

│ ├── sub-15_task-flanker_run-1_events.tsv

│ ├── sub-15_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/9f/0W/MD5E-s28289760--433000a1def662e72d8433dba151c61b.nii.gz/MD5E-s28289760--433000a1def662e72d8433dba151c61b.nii.gz

│ └── sub-15_task-flanker_run-2_events.tsv

├── sub-16

│ ├── anat

│ │ └── sub-16_T1w.nii.gz -> ../../.git/annex/objects/4g/8k/MD5E-s10927450--a196f7075c793328dd6ff3cebf36ea6b.nii.gz/MD5E-s10927450--a196f7075c793328dd6ff3cebf36ea6b.nii.gz

│ └── func

│ ├── sub-16_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/9z/g2/MD5E-s29757991--1a1648b2fa6cc74e31c94f109d8137ba.nii.gz/MD5E-s29757991--1a1648b2fa6cc74e31c94f109d8137ba.nii.gz

│ ├── sub-16_task-flanker_run-1_events.tsv

│ ├── sub-16_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/k8/4F/MD5E-s29773832--fe08739ea816254395b985ee704aaa99.nii.gz/MD5E-s29773832--fe08739ea816254395b985ee704aaa99.nii.gz

│ └── sub-16_task-flanker_run-2_events.tsv

├── sub-17

│ ├── anat

│ │ └── sub-17_T1w.nii.gz -> ../../.git/annex/objects/jQ/MQ/MD5E-s10826014--8e2a6b062df4d1c4327802f2b905ef36.nii.gz/MD5E-s10826014--8e2a6b062df4d1c4327802f2b905ef36.nii.gz

│ └── func

│ ├── sub-17_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/Wz/2P/MD5E-s28991563--9845f461a017a39d1f6e18baaa0c9c41.nii.gz/MD5E-s28991563--9845f461a017a39d1f6e18baaa0c9c41.nii.gz

│ ├── sub-17_task-flanker_run-1_events.tsv

│ ├── sub-17_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/jF/3m/MD5E-s29057821--84ccc041163bcc5b3a9443951e2a5a78.nii.gz/MD5E-s29057821--84ccc041163bcc5b3a9443951e2a5a78.nii.gz

│ └── sub-17_task-flanker_run-2_events.tsv

├── sub-18

│ ├── anat

│ │ └── sub-18_T1w.nii.gz -> ../../.git/annex/objects/3v/pK/MD5E-s10571510--6fc4b5792bc50ea4d14eb5247676fafe.nii.gz/MD5E-s10571510--6fc4b5792bc50ea4d14eb5247676fafe.nii.gz

│ └── func

│ ├── sub-18_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/94/P2/MD5E-s28185776--5b3879ec6fc4bbe1e48efc64984f88cf.nii.gz/MD5E-s28185776--5b3879ec6fc4bbe1e48efc64984f88cf.nii.gz

│ ├── sub-18_task-flanker_run-1_events.tsv

│ ├── sub-18_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/qp/6K/MD5E-s28234699--58019d798a133e5d7806569374dd8160.nii.gz/MD5E-s28234699--58019d798a133e5d7806569374dd8160.nii.gz

│ └── sub-18_task-flanker_run-2_events.tsv

├── sub-19

│ ├── anat

│ │ └── sub-19_T1w.nii.gz -> ../../.git/annex/objects/Zw/p8/MD5E-s8861893--d338005753d8af3f3d7bd8dc293e2a97.nii.gz/MD5E-s8861893--d338005753d8af3f3d7bd8dc293e2a97.nii.gz

│ └── func

│ ├── sub-19_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/04/k6/MD5E-s28178448--3874e748258cf19aa69a05a7c37ad137.nii.gz/MD5E-s28178448--3874e748258cf19aa69a05a7c37ad137.nii.gz

│ ├── sub-19_task-flanker_run-1_events.tsv

│ ├── sub-19_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/mz/P4/MD5E-s28190932--91e6b3e4318ca28f01de8cb967cf8421.nii.gz/MD5E-s28190932--91e6b3e4318ca28f01de8cb967cf8421.nii.gz

│ └── sub-19_task-flanker_run-2_events.tsv

├── sub-20

│ ├── anat

│ │ └── sub-20_T1w.nii.gz -> ../../.git/annex/objects/g1/FF/MD5E-s11025608--5929806a7aa5720fc755687e1450b06c.nii.gz/MD5E-s11025608--5929806a7aa5720fc755687e1450b06c.nii.gz

│ └── func

│ ├── sub-20_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/v5/ZJ/MD5E-s29931631--bf9abb057367ce66961f0b7913e8e707.nii.gz/MD5E-s29931631--bf9abb057367ce66961f0b7913e8e707.nii.gz

│ ├── sub-20_task-flanker_run-1_events.tsv

│ ├── sub-20_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/J3/KW/MD5E-s29945590--96cfd5b77cd096f6c6a3530015fea32d.nii.gz/MD5E-s29945590--96cfd5b77cd096f6c6a3530015fea32d.nii.gz

│ └── sub-20_task-flanker_run-2_events.tsv

├── sub-21

│ ├── anat

│ │ └── sub-21_T1w.nii.gz -> ../../.git/annex/objects/K6/6K/MD5E-s8662805--77b262ddd929fa08d78591bfbe558ac6.nii.gz/MD5E-s8662805--77b262ddd929fa08d78591bfbe558ac6.nii.gz

│ └── func

│ ├── sub-21_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/Wz/p9/MD5E-s28756041--9ae556d4e3042532d25af5dc4ab31840.nii.gz/MD5E-s28756041--9ae556d4e3042532d25af5dc4ab31840.nii.gz

│ ├── sub-21_task-flanker_run-1_events.tsv

│ ├── sub-21_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/xF/M3/MD5E-s28758438--81866411fc6b6333ec382a20ff0be718.nii.gz/MD5E-s28758438--81866411fc6b6333ec382a20ff0be718.nii.gz

│ └── sub-21_task-flanker_run-2_events.tsv

├── sub-22

│ ├── anat

│ │ └── sub-22_T1w.nii.gz -> ../../.git/annex/objects/JG/ZV/MD5E-s9282392--9e7296a6a5b68df46b77836182b6681a.nii.gz/MD5E-s9282392--9e7296a6a5b68df46b77836182b6681a.nii.gz

│ └── func

│ ├── sub-22_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/qW/Gw/MD5E-s28002098--c6bea10177a38667ceea3261a642b3c6.nii.gz/MD5E-s28002098--c6bea10177a38667ceea3261a642b3c6.nii.gz

│ ├── sub-22_task-flanker_run-1_events.tsv

│ ├── sub-22_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/VX/Zj/MD5E-s28027568--b34d0df9ad62485aba25296939429885.nii.gz/MD5E-s28027568--b34d0df9ad62485aba25296939429885.nii.gz

│ └── sub-22_task-flanker_run-2_events.tsv

├── sub-23

│ ├── anat

│ │ └── sub-23_T1w.nii.gz -> ../../.git/annex/objects/4Z/4x/MD5E-s10626062--db5a6ba6730b319c6425f2e847ce9b14.nii.gz/MD5E-s10626062--db5a6ba6730b319c6425f2e847ce9b14.nii.gz

│ └── func

│ ├── sub-23_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/VK/8F/MD5E-s28965005--4a9a96d9322563510ca14439e7fd6cea.nii.gz/MD5E-s28965005--4a9a96d9322563510ca14439e7fd6cea.nii.gz

│ ├── sub-23_task-flanker_run-1_events.tsv

│ ├── sub-23_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/56/20/MD5E-s29050413--753b0d2c23c4af6592501219c2e2c6bd.nii.gz/MD5E-s29050413--753b0d2c23c4af6592501219c2e2c6bd.nii.gz

│ └── sub-23_task-flanker_run-2_events.tsv

├── sub-24

│ ├── anat

│ │ └── sub-24_T1w.nii.gz -> ../../.git/annex/objects/jQ/fV/MD5E-s10739691--458f0046eff18ee8c43456637766a819.nii.gz/MD5E-s10739691--458f0046eff18ee8c43456637766a819.nii.gz

│ └── func

│ ├── sub-24_task-flanker_run-1_bold.nii.gz -> ../../.git/annex/objects/km/fV/MD5E-s29354610--29ebfa60e52d49f7dac6814cb5fdc2bc.nii.gz/MD5E-s29354610--29ebfa60e52d49f7dac6814cb5fdc2bc.nii.gz

│ ├── sub-24_task-flanker_run-1_events.tsv

│ ├── sub-24_task-flanker_run-2_bold.nii.gz -> ../../.git/annex/objects/Wj/KK/MD5E-s29423307--fedaa1d7c6e34420735bb3bbe5a2fe38.nii.gz/MD5E-s29423307--fedaa1d7c6e34420735bb3bbe5a2fe38.nii.gz

│ └── sub-24_task-flanker_run-2_events.tsv

├── sub-25

│ ├── anat

│ │ └── sub-25_T1w.nii.gz -> ../../.git/annex/objects/Gk/FQ/MD5E-s8998578--f560d832f13e757b485c16d570bf6ebc.nii.gz/MD5E-s8998578--f560d832f13e757b485c16d570bf6ebc.nii.gz

│ └── func