Neurodesk Copilot using Local LLMs

Neurodesk Copilot: How to use LLMs for code autocompletion and chat support in the Neurodesk ecosystem

Neurodesk Copilot: Using locally hosted LLMs inside the Neurodesk environment

Configuring LLM Provider and models

Neurodesk Copilot lets you use local Large Language Models (LLMs) for code autocompletion and chat-based assistance directly within your Neurodesk environment. This guide shows how to configure Ollama as your local LLM provider and get started with chat and inline code completion.

You configure the model provider and model options in the Notebook Intelligence Settings dialog. You can open this dialog in three ways: from the JupyterLab Settings menu -> Notebook Intelligence Settings, by typing the /settings command in Copilot Chat, or from the command palette.

Step 1: Choose Ollama and the Neurodesk model. Type /settings in the chat interface, select Ollama as the provider, select the Neurodesk model, and save the settings.

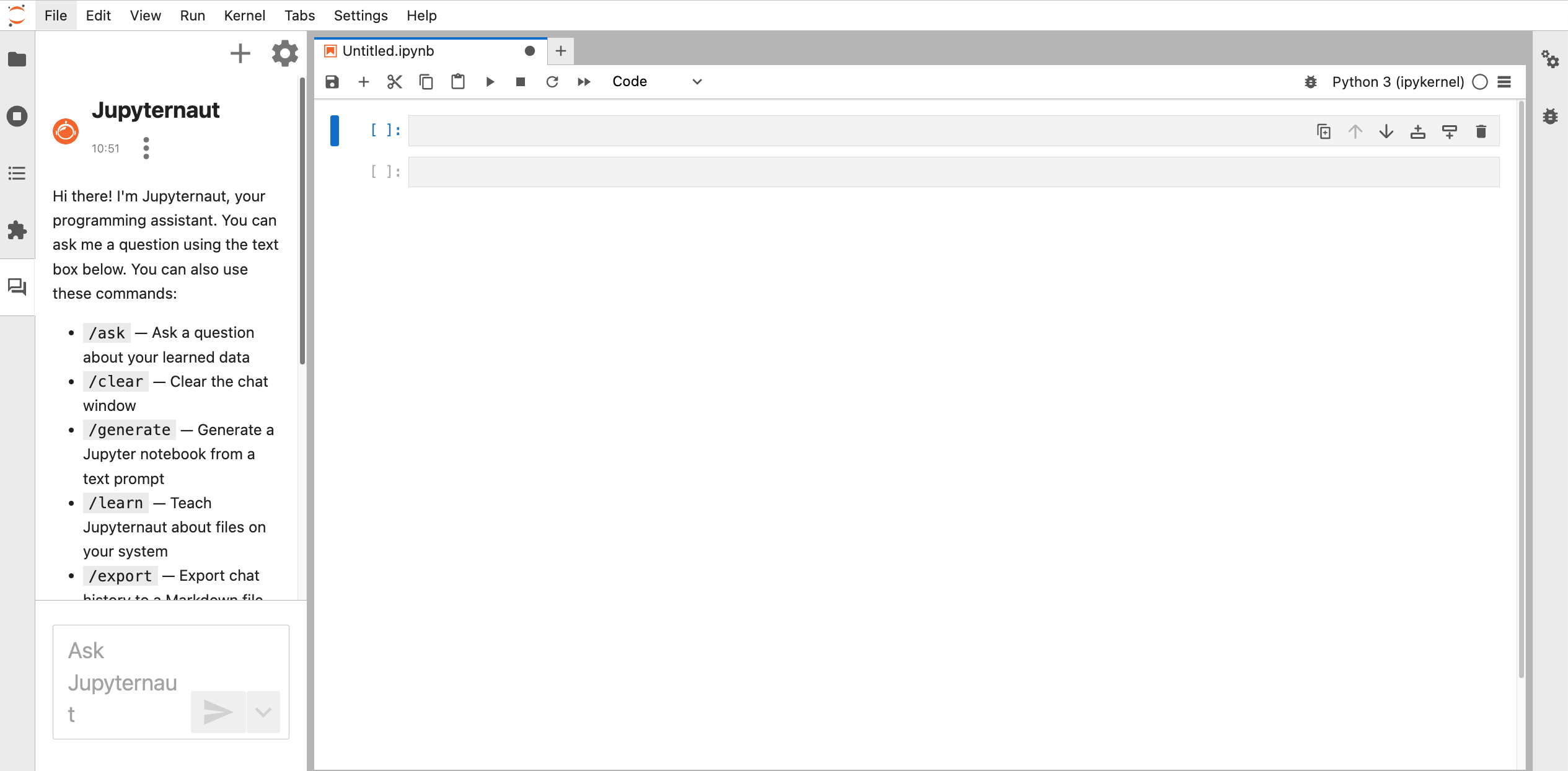

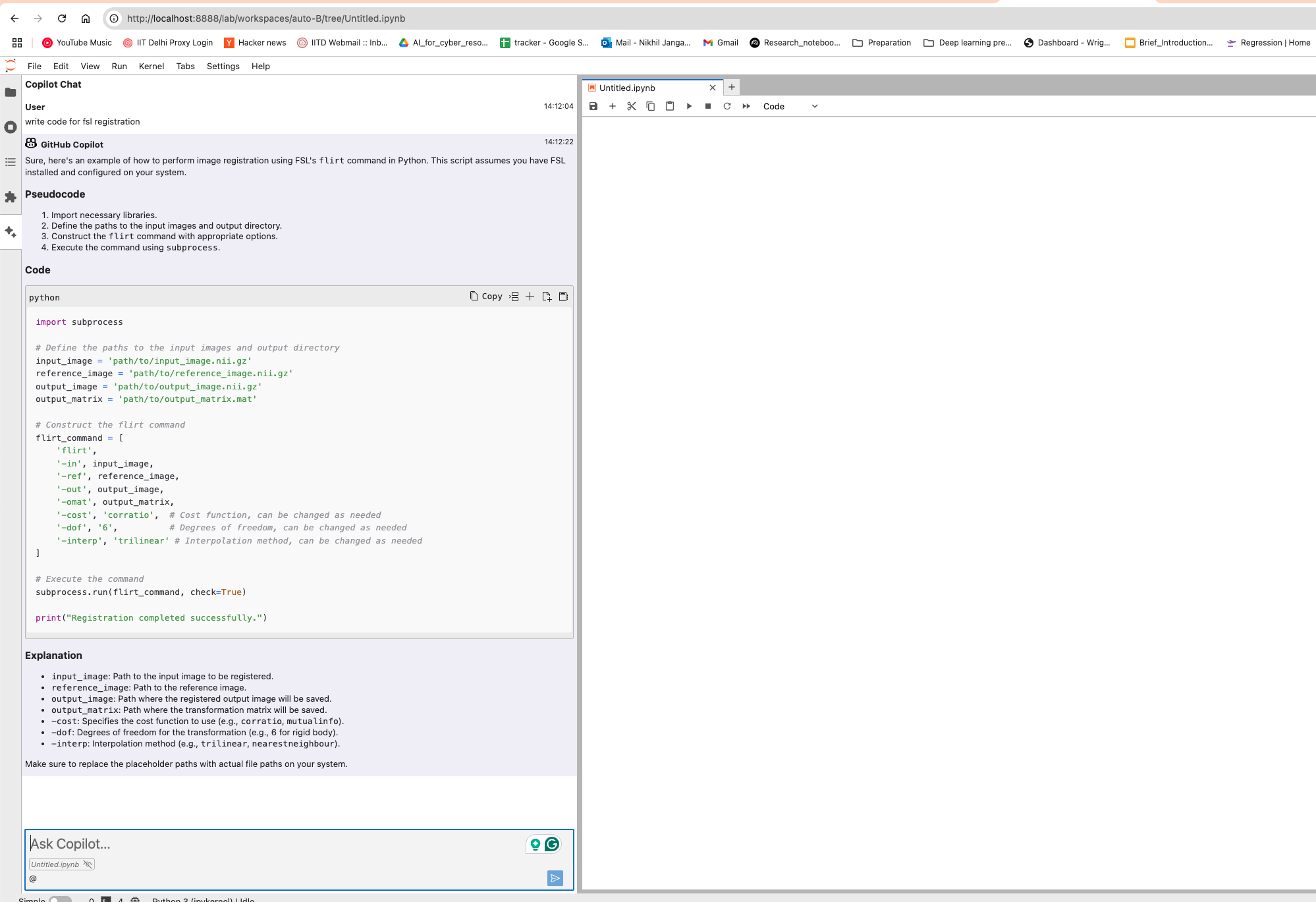
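If the model list in the settings dialog looks empty or unexpected, it can help to check which models the local Ollama server reports. The following is a minimal sketch, not part of Neurodesk Copilot itself. It assumes Ollama is reachable at its default address `http://localhost:11434` (your Neurodesk setup may differ) and queries Ollama's `/api/tags` endpoint. The helper names `parse_model_names` and `list_local_models` are made up for this example.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama listens on its default local address. Adjust this if
# your Neurodesk setup exposes the server elsewhere.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload):
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [m["name"] for m in payload.get("models", []) if "name" in m]


def list_local_models(base_url=OLLAMA_URL, timeout=5.0):
    """Return the names of locally available models, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(json.load(resp))
    except (urllib.error.URLError, OSError, ValueError):
        # Server not running, wrong address, or an unexpected response body.
        return None


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Could not reach Ollama at", OLLAMA_URL)
    else:
        print("Available models:", models)
```

Run it from a terminal or a notebook cell inside Neurodesk. If the model you selected in the settings dialog does not appear in the printed list, the provider settings and the local server are probably out of sync.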

Step 2: Use chat interface

- Open the Chat feature in Neurodesk and type your query or command. Examples:

  - “Explain how to import an MRI dataset in Python.”

  - “Help me debug my data-loading function.”

- Press Enter. Neurodesk Copilot responds with explanations, tips, or suggested code.

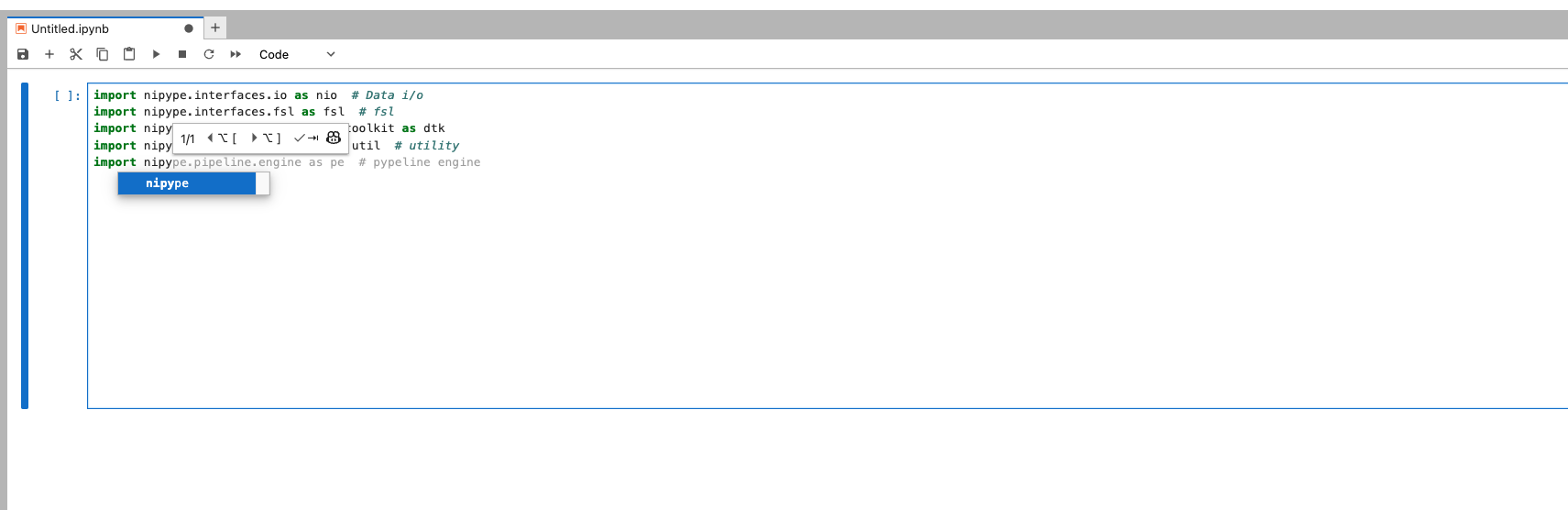
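If the chat panel does not respond, one way to narrow down the problem is to send the same kind of query straight to the local Ollama server, bypassing the chat interface. The sketch below is a hedged illustration, not how Neurodesk Copilot itself talks to the model. It assumes Ollama's default address `http://localhost:11434` and uses Ollama's `/api/chat` endpoint with streaming turned off. `MODEL_NAME` is a placeholder that you need to replace with the model chosen in Step 1, and the helper names are made up for this example.

```python
import json
import urllib.error
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default address
MODEL_NAME = "your-model-name"  # hypothetical placeholder, replace with your model


def build_chat_request(model, prompt, base_url=OLLAMA_URL):
    """Build a non-streaming POST request for Ollama's /api/chat endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(payload):
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    return payload.get("message", {}).get("content", "")


def ask(model, prompt, timeout=120.0):
    """Send one prompt and return the reply text, or None on failure."""
    try:
        request = build_chat_request(model, prompt)
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            return extract_reply(json.load(resp))
    except (urllib.error.URLError, OSError, ValueError):
        # Server unreachable, unknown model, or an unexpected response body.
        return None


if __name__ == "__main__":
    reply = ask(MODEL_NAME, "Explain how to import an MRI dataset in Python.")
    print(reply if reply is not None else "No reply from Ollama.")
```

A reply here with no reply in the chat panel suggests the issue is in the provider settings rather than in the model or the server.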

Step 3: Code completion

- Begin typing your code in a cell in Neurodesk. As you type, Copilot shows inline suggestions. Press the Tab key to accept a suggestion.

- If the suggestion isn’t relevant, continue typing or press Escape to dismiss it.

To switch inline completion from automatic suggestions to manual invocation, change the setting under Settings -> Settings Editor -> Inline Completer.